Welp, over the last month or so I went down a lot of rabbit holes. I was spinning up local servers, digging into advanced network security, dissecting Euclidean distance, and tweaking complex configuration files that didn't really move the needle. Some great learning, but not great progress. So, here's where I'm at:

Knowledge gained so far

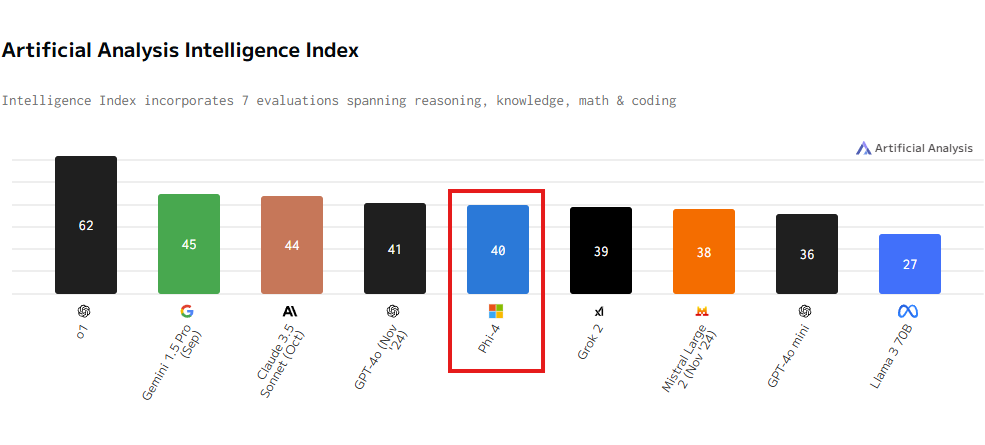

- Model Evaluation & Integration - Testing, benchmarking, and integrating state-of-the-art models from a variety of sources

- Core Concepts - Model strengths, prompting, quantization, vector DBs, tensors, agents, MCP, ACP/A2A, and other fundamentals

- Toolchain Fluency - Docker, Ollama, Hugging Face, Open WebUI, n8n, Supabase, and various APIs

What I've built

- Goals App - an agent that intelligently updates a database based on natural language input (think "just read for 20 minutes," or, for business, updating CRM notes and fields)

- News Aggregator - a workflow that pulls and summarizes targeted data sources (competitor research, market trends, sentiment analysis, etc.)

- Personal Website - coded entirely via local LLM prompts (although I wouldn't recommend it). Proof that AI can carry a non-coder from zero to a live site.

I'll break down each project in its own post.

Learning From My Mistakes

Going local-only taught me the foundations, but trying to self-host everything slowed real progress, and I stayed on that track for too long. I also spent too many hours researching concepts and frameworks that I will most likely never use.

No flashy new app or benchmark today. I just wanted to give an honest update and say that I will be more active with some cool, real projects very soon.